Sometimes I get questions from friends who are struggling with the term machine learning. How does a machine actually learn?

Well, to answer that question, we need to understand what data analytics, or data science, is.

I could go on and on about data analytics, but simply put, my definition is this: data analytics is about describing a set of data in the most general way possible so that we can make decisions or predictions.

We can use statistics to describe a data set - what is the mode of the data? What is the mean? How about the variance and standard deviation?
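To make that concrete, here is a minimal sketch using Python's built-in `statistics` module. The `heights` list is made-up data for illustration only, and I am treating it as a whole population (hence `pvariance` and `pstdev`); for a sample you would likely want `variance` and `stdev` instead.

```python
import statistics

# Hypothetical data: heights in centimetres (made-up values for illustration).
heights = [160, 165, 165, 170, 175, 180, 165]

# Describe the data with a handful of summary statistics.
summary = {
    "mode": statistics.mode(heights),           # most frequent value
    "mean": statistics.mean(heights),           # arithmetic average
    "variance": statistics.pvariance(heights),  # population variance
    "std_dev": statistics.pstdev(heights),      # population standard deviation
}

for name, value in summary.items():
    print(f"{name}: {value:.2f}")
```

Four numbers will not tell you everything about the data, but they already describe it far more compactly than the raw list does.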

We can also use statistics to test a new data point to see if it belongs with the data we already have.

We can draw a best-fit line (linear regression) and use statistics (the mean squared error) to see if it is indeed ‘best-fit’. We can also try to group data together by features (colours, sizes, etc.).
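As a rough illustration of the best-fit line, the sketch below fits a straight line by ordinary least squares and then checks that nudging the slope makes the mean squared error worse. The function names `fit_line` and `mean_squared_error` and the data points are my own, chosen only for this example.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Covariance-like and variance-like sums (the 1/n factors cancel out).
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def mean_squared_error(xs, ys, slope, intercept):
    """Average squared gap between the observed ys and the line's predictions."""
    return sum((y - (slope * x + intercept)) ** 2 for x, y in zip(xs, ys)) / len(xs)


# Made-up data that roughly follows a straight line.
xs = [1, 2, 3, 4, 5]
ys = [2.1, 3.9, 6.2, 8.1, 9.8]

slope, intercept = fit_line(xs, ys)
best_mse = mean_squared_error(xs, ys, slope, intercept)
# Any other line, such as one with a slightly steeper slope, should score worse.
other_mse = mean_squared_error(xs, ys, slope + 0.5, intercept)

print(f"fitted line: y = {slope:.2f}x + {intercept:.2f}")
print(f"MSE of fitted line: {best_mse:.4f}, MSE of nudged line: {other_mse:.4f}")
```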

So we can see that data analytics consists of two parts - the statistics, and the deploying of the statistical methods.

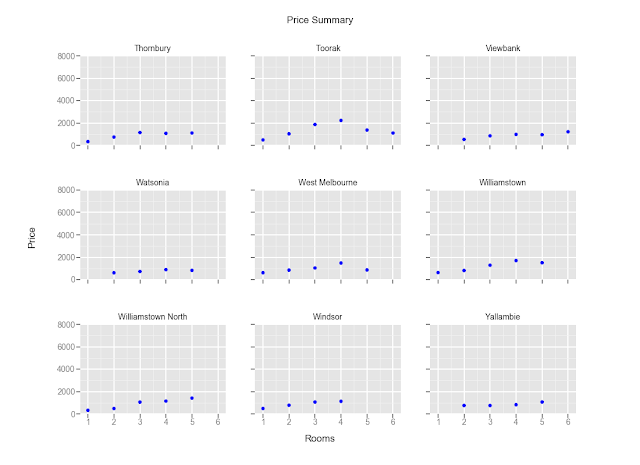

How can we describe the data?

Machine learning is one of the tools for the latter part: deploying statistical methods or other means (namely grouping) to describe data. Of course, we can deploy the methods manually - we can try to draw lines and derive the equation of each line by hand. However, with the rapid growth in the size of data, doing so is becoming humanly impossible. Furthermore, computers are faster and less prone to mistakes. (And hence the term data science was born.)

In short, machine learning is the use of computers and algorithms to describe data. However, this is done not by explicitly coding the logic, but through iterative methods that search for the set of model parameters that minimizes inaccuracy (or maximises accuracy; I will explain this clumsy wording soon).
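One common example of such an iterative method is gradient descent. The sketch below is only an illustration, not the one true way to do it: the function name `gradient_descent`, the learning rate, the number of steps and the toy data are all my own assumptions. Notice that nowhere do we code the rule "y = 2x + 1"; the loop finds the slope and intercept by repeatedly nudging them in the direction that reduces the average squared error.

```python
def gradient_descent(xs, ys, learning_rate=0.05, steps=2000):
    """Fit y = slope * x + intercept by repeatedly reducing the mean squared error."""
    slope, intercept = 0.0, 0.0  # start from an arbitrary guess
    n = len(xs)
    for _ in range(steps):
        # How far off is the current line at each data point?
        errors = [(slope * x + intercept) - y for x, y in zip(xs, ys)]
        # Gradients of the mean squared error with respect to each parameter.
        grad_slope = 2 / n * sum(e * x for e, x in zip(errors, xs))
        grad_intercept = 2 / n * sum(errors)
        # Take a small step downhill.
        slope -= learning_rate * grad_slope
        intercept -= learning_rate * grad_intercept
    return slope, intercept


# Toy data generated from y = 2x + 1 (the code is never told this rule).
xs = [1, 2, 3, 4, 5]
ys = [3, 5, 7, 9, 11]

slope, intercept = gradient_descent(xs, ys)
print(f"learned line: y = {slope:.3f}x + {intercept:.3f}")
```

If the learning rate is too large the updates can overshoot and diverge, so the value used here is simply one that works for this small data set.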

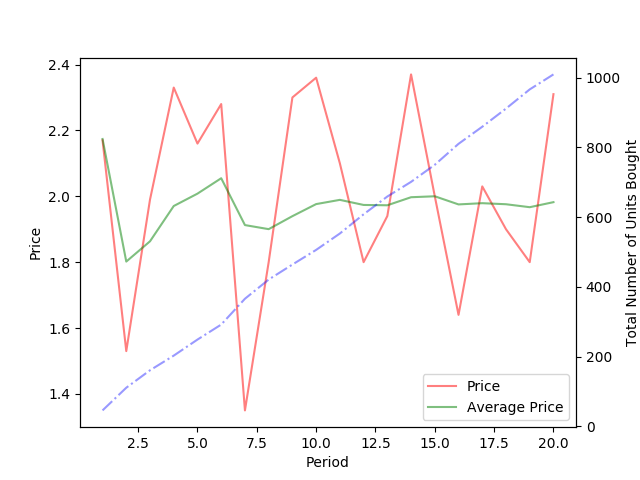

In statistics, computing the mean squares error (MSE) is one of the ways to calculate the amount of error. The objective of the fitting line is to minimize the MSE, so that the fitted line can be used to make predictions.

In computer science speak, inaccuracies are costs described by a cost function that curiously looks like a MSE function. All machine learning algorithms (or methods) aims to minimize this cost functions.

I will elaborate in the next post.

Meantime, these are some of the resources (free!) that I helped me learn machine learning

In short, machine learning is the use of computers and algorithms to describe data. However this is done not by explicitly coding the logic, but through recursive methods to find the optimal set of model parameters that minimizes inaccuracy (or maximises accuracy, I will explain myself for this clumsy wording soon).

In statistics, computing the mean squares error (MSE) is one of the ways to calculate the amount of error. The objective of the fitting line is to minimize the MSE, so that the fitted line can be used to make predictions.

|

| A fitted line, but does it have minimum MSE? |

In computer-science speak, inaccuracies are costs described by a cost function that curiously looks like an MSE function. Most machine learning algorithms (or methods) aim to minimize such a cost function.
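To show the resemblance, here are the two formulas side by side. The notation is my own choice for illustration: $n$ is the number of data points, $y_i$ the observed values, $\hat{y}_i$ the predictions, $\theta$ the model parameters and $h_\theta$ the model. The extra factor of $\tfrac{1}{2}$ in the cost function is one common convention (it tidies up the derivative) and does not change which parameters are optimal.

```latex
\text{MSE} = \frac{1}{n} \sum_{i=1}^{n} \left( y_i - \hat{y}_i \right)^2
\qquad
J(\theta) = \frac{1}{2n} \sum_{i=1}^{n} \left( h_\theta(x_i) - y_i \right)^2
```

With $\hat{y}_i = h_\theta(x_i)$, the cost $J(\theta)$ is just half the MSE, so minimizing one minimizes the other.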

I will elaborate in the next post.

In the meantime, these are some of the (free!) resources that helped me learn machine learning:

Video Lectures on Statistical Learning

This is a series of video lectures based on the book An Introduction to Statistical Learning (or ISLR for short). You can download a free PDF copy of the book. Statistical learning is statistician-speak for machine learning (which is computer-scientist-speak). It covers most of machine learning from a statistician's point of view. I find it beneficial to go through this. You can also find this course on Stanford's Lagunita website. It is free!

Machine Learning by Andrew Ng on Coursera

This is like the de facto go-to course for learning about machine learning. Ng will go through the intuition behind the common machine learning algorithms. You will learn about matrix/vector multiplication (a lot of it!). You will learn to use Matlab or Octave. Seen from this course, machine learning is nothing but a chunk of matrix multiplication. Nevertheless, IMO, it is a course worth the buck to get the certificate from Coursera. Ng also has a deep learning course that I am currently learning from.

Feel free to air your comments!

~ZF